Five Questions for State Boards to Ask about AI in Schools

Generative artificial intelligence (GenAI) draws on vast troves of data to create new content that mimics human forms of thinking, processing, and creation. Adopted at an unprecedented rate across all sectors, GenAI is reshaping education systems in far-reaching ways. The technology is a double-edged sword, creating tremendous opportunities but also posing considerable risks and challenges for schools.

1. Why should our state adopt or review policies and frameworks to guide the use of AI in schools?

Teachers, students, school leaders, and staff are already using AI tools, which are readily accessible. Yet in many places this uptake for educational purposes is occurring in a policy vacuum, and teachers and leaders are increasingly seeking state guidance. Because they influence state education governance and shape policies on adoption, resistance, or regulation of technology, state boards of education are well positioned to help fill this vacuum. They play a crucial role, leveraging their power to interrogate what happens in the integration of technology in education, how it happens, and why.

UNESCO highlights four categories of potential applications of AI in education, each of which carries potential benefits and risks:

- education management and delivery (e.g., scheduling, learning analytics, educational chatbots);

- learning and assessment (e.g., intelligent tutoring systems, dialogue-based tutoring systems, AI-supported reading and language learning);

- empowering teachers and enhancing teaching (e.g., monitoring discussion forums); and

- lifelong learning (e.g., AI-driven personalized lifelong tutor).[1]

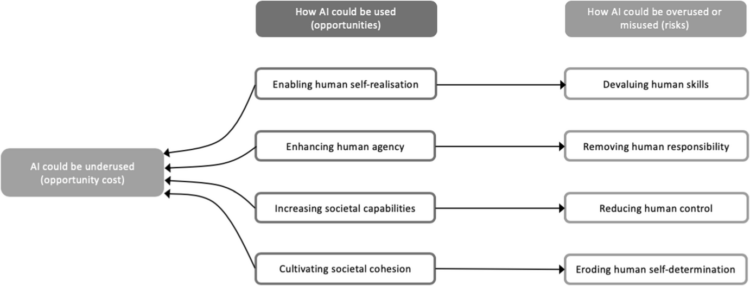

Although GenAI opens doors for innovation in teaching and learning, state policymakers must also mitigate the risks.[2] State policymakers should consider how AI developments affect teaching and learning, including the opportunity costs of its overuse, misuse, or underuse: the cognitive offloading of tasks or the loss of human agency, for instance (figure 1). They also must pay attention to issues around privacy and data use, data bias, and copyright compliance. For example, if data that is biased or contains stereotypes is used to train an AI tool, its output will perpetuate those stereotypes and biases. If its training material includes copyrighted images or text obtained without permission from the creators of the material, copyright violations may be amplified.

Figure 1. Opportunities and Risks of AI Integration

At the same time, policymakers should develop clear yet flexible frameworks that support innovation and enable schools to take advantage of the many benefits of the technology through responsible applications.

2. How are leading states addressing AI in K-12?

Realizing AI’s dual potential for benefit and risk, state policymakers are already responding: 32 US states have crafted AI-related frameworks for K-12 schools,[3] with Oregon and California among the first to do so, in 2023. Most of the states released guidance in 2024, and Georgia, Maine, and Alaska are among those following suit in 2025.

Yet the policy landscape remains underdeveloped. Our recent survey of NASBE members revealed that states are in the early stages of addressing GenAI, with most yet to implement official policies. While many states are providing guidance or toolkits or are starting to write state policies, local decisions dominate the landscape, with each school district primarily responsible for shaping its own policies. One NASBE respondent shared that state implementation had been discussed in their state, but “[f]or now, local school districts are determining policies or guidelines.”

Surveyed NASBE members agree that it is critical for states to define acceptable AI use and establish clear parameters for students and teachers through practical, responsible policy and guidance development and implementation. “That’s number one,” a member said. “When we look at lawsuits that are starting to happen around AI, especially around plagiarism, the [issue] is there wasn’t a policy in place to enforce.” Survey participants raised questions about effective training for staff, data privacy and security, GenAI’s potential to support learning, varied policy models tailored to local districts’ needs, and plagiarism. One participant asked how educators will be able “to tell the difference between student-generated work and AI? Is AI impeding or helping learning, and if so, in what way?”

GenAI advanced rapidly after the first ChatGPT tool emerged in 2022, and states began studying its impact on K-12 soon thereafter, as Illinois did in 2023.[4] Illinois leaders convened the Generative AI and Natural Language Processing Task Force, an initiative of the state’s Department of Innovation and Technology. Task force members included representatives from the office of the state superintendent, community college board, higher education board, and the state board of education as well as teachers and principals. Subject-matter experts briefed the working groups in a series of meetings. The culminating report included recommendations from the K-12 Education Working Group, which focused on the responsible integration of GenAI into K-12 classrooms through ethical use, equitable access, and teacher training.[5]

The state frameworks all emphasize ethical uses and safety. For example, Wisconsin’s K-12 guidance is intended to help K-12 educators, librarians, students, and administrators integrate AI effectively and responsibly.[6] It stresses adopting a human-centered approach to AI application; updating guidance to keep it current with the rapidly shifting federal and state policy landscape; and building understanding of the impacts of GenAI’s visual, text, and video output. Deepfakes, for instance, are images or videos in which one person’s image or voice is substituted for another without consent, and GenAI markedly eases their creation. One 2024 survey found that 40 percent of students were aware of peers in their schools who became deepfake targets.[7] Especially when they are created as part of a cyberbullying incident, deepfakes can be traumatic for young people.[8]

Given the rapid pace of AI innovation, state education leaders must remain responsive and proactive. Following the lead of early adopters like Illinois and Wisconsin, other states must develop guidance that prioritizes ethics, advances equity, ensures safety, and adapts to changing needs. States may also consider creating a dedicated position for an AI education specialist who can lead K-12 AI initiatives and provide professional development for teachers, as the Utah State Board of Education has done. To ease the burden on local districts, states should take a leading role in establishing clear, practical, and adaptive frameworks to guide schools in navigating the opportunities and risks of AI in K-12 education.

3. Are there guiding principles for AI frameworks in education? What are they, and how are they cultivated?

Three guiding principles animate good frameworks. 1) Model policies involve many stakeholders, from across many disciplines, in their creation. 2) They center student well-being. 3) They address ethical, transparent, responsible use of GenAI, urging users to comply with existing laws and policies to protect intellectual property, academic integrity, and data privacy.

Cross-pollination. Wise policymakers recognize that traditional approaches will likely prove inadequate to address GenAI in education. Instead, they opt for iterative approaches that draw ideas and innovations from multiple disciplines, with many stakeholders informing policy development and refinement. Parents, students, technology experts, learning scientists, psychologists, lawyers, and the education community are all critical voices that must be heard. In listening tours and other forums, board members can seek diverse perspectives and a cross-pollination of ideas to inform dynamic responses. Such input is needed to “really understand … the trajectory of the field,” one NASBE member said. “Trying to be able to look downfield a little bit is a really important skill, because this is happening so fast…. So it’s a couple of things: identifying good aggregators whom you trust [and] cross pollinating with your colleagues both within the state [and] across states.”

Human Flourishing. Policymakers should ensure that guidelines prioritize key principles for ethical implementation, such as equity and student welfare. “There are these technical harms and these broader harms,” said one NASBE member. “One of my big concerns is this idea, ‘Oh, we don’t need to teach writing.’ Writing is thinking, and you learn it through … productive struggle.… There are certain kinds of productive struggles that are really important to us as humans in terms of how we learn.” In particular, the ethical and societal impacts of computing should be foundational in computer science courses, with students exploring how the tools they develop impact human flourishing. The Principled Innovation Framework at Arizona State University is intended to guide educators and others in incorporating moral, civic, performance, and intellectual principles such as curiosity and integrity into technology use and creation.[9]

Responsible Uses. Good GenAI policies will embrace accessible, equitable opportunities for students and teachers to learn how to use the technology productively and appropriately. AI literacy education must be designed to meet the needs of diverse communities, including students with disabilities and English learners.

Catherine Adams, a professor and researcher at the University of Alberta, and her colleagues synthesized a set of ethical principles for AI in K-12 education that they derived from several existing international frameworks:

- Transparency. Stakeholders understand how the AI tool makes decisions; users understand what can be expected from interacting with AI.

- Justice and Fairness. Equitable, inclusive, and ethical AI use, with a particular focus on nondiscrimination and fairness.

- Nonmaleficence. Avoidance of harm and maintaining children’s safety, security, and integrity of their human rights and freedoms.

- Responsibility. Clarity regarding ethical responsibility, liability, and accountability in AI tools used with children.

- Privacy. Protecting children’s data, along with allowing teachers, students, and parents to audit and trace data usage.

- Beneficence. Policy support for students’ well-being, development, and growth, aligned to developmental science.

- Freedom and Autonomy. Informed participation and learner-centered AI use that promotes students’ agency over their learning.

- Pedagogical Appropriateness. Alignment with educational goals, application of ethical principles, use of evidence-based practices, and supportive learning environments.

- Children’s Rights. Use of AI to empower children and the institutions that support them.

- AI Literacy. Public education on AI use, integration of AI skills into curricula, and preparation of students to use AI after they graduate and enter the workforce.[10]

4. In a rapidly changing landscape, how can state AI guidance and policies remain relevant and useful?

Although the technology is already reaching millions of students, much remains unknown about its impact on learning outcomes, social-emotional well-being, attitudes toward teaching and learning, graduation rates, and school system finances, to name but a few. Will AI lead to greater personalized, adaptive learning experiences for all learners? Will it perpetuate biased approaches to teaching and learning, leading to detrimental outcomes? Will it enhance educators’ work life? Will schools and districts use AI to justify budget cuts? Coordinated research, implementation, and policy efforts will help identify and mitigate implementation barriers and navigate the ethical impacts of AI in education.

The National Education Lab AI (NOLAI) for elementary, secondary, and special needs education in the Netherlands is a promising consortium-based approach to ensuring coordinated research and implementation. Comprising education, business, and science partners and funded by the National Growth Fund, NOLAI researches and co-develops dozens of projects with Dutch schools, driven by the schools’ questions on responsible educational AI. One project developed an AI-based environment that lets students visualize their curriculum-aligned math learning goals. Another, the Co-Teach Computer Science platform, gives students personalized support for learning computer science while teachers gain instructional support. Such context-based, participatory research is a powerful way to pilot and explore AI implementation in real time in actual classrooms and schools. Embedded research and practice are powerful drivers for ensuring that guidance and policies remain relevant, responsive, and grounded in evidence and data.

State boards and higher education institutions can partner to conduct research and thereby generate critical insights to advance uses of AI in their own educational contexts. They may convene a working group, task force, or committee of researchers, practitioners, administrators, and experts in education, learning sciences, and technology to collect data on AI trends in the state and give the board recommendations on policy, funding, and guidance. They may explore GenAI at annual retreats or study sessions and work closely with their departments of education to stay informed on the latest adoptions and guidance to schools and districts. Engaging directly with AI tools will allow board members to stay abreast of new developments and become more aware of the tools’ opportunities, limitations, and biases. Armed with this knowledge, they can recommend enhancements to state AI education guidance, frameworks, and professional development for educators.

Understanding AI implementation in schools will continue to be critical to ensuring the relevance of state guidance. As a NASBE member shared, “You can’t just trust AI to … write a lesson and it’s going to be great. You’ve got to know. You’ve got to go through it … to be [able to say], ‘Here are the parts of this that are actually great, and here are the parts that need work.’ ”

As Washington state’s guidance put it, smart integration of AI in schools begins with policymakers understanding that AI is “not a replacement for human intelligence or humanitarian presence in education.”[11] A human-centered approach to AI integration, from the initial design and development of AI initiatives through their implementation and evaluation, will help ensure that these efforts are inclusive, safe, secure, accountable, and transparent.

There are many resources to help policymakers as they grapple with decisions of tremendous consequence: The Generative AI for Education Hub at Stanford University houses research on how GenAI affects students, schools, and learning; the Council of the Great City Schools and education technology association CoSN issued a GenAI readiness checklist; the Massachusetts Department of Elementary and Secondary Education has a GenAI hub; CoSN and CAST have released AI and accessibility guidance; Bellwether releases AI-related insights and publications; and Common Sense Media has published AI Risk Assessments.[12] State board members can also amplify the need for a state-funded consortium of industry, academia, and K-12 partners to pilot and evaluate AI projects.

5. What resources and professional development do our educators need to support their own AI literacy?

GenAI is ubiquitous, but knowledge on how to deploy it effectively in education is not. “Where we’re seeing the most promising things is when a teacher takes a very purposeful pedagogy that’s embedded in their state standards with great content and then applies AI to it in a really creative way,” one NASBE member said. Regardless of their varied contexts, every educator needs foundational knowledge on how to apply technology to their work. It will take intentional, concerted effort to ensure that all educators gain AI literacy through experiences that build their proficiency in algorithmic problem solving, computational thinking, and their understanding of AI’s ethical and societal impacts.[13]

To be prepared to engage in a computer-powered world, all students must learn about AI. In partnership with AI4K12, the Computer Science Teachers Association articulated AI content and skills that are important for all students.[14] The project parses foundational AI learning outcomes into five categories: humans and AI, representing and reasoning, machine learning, ethical AI system design and programming, and societal impacts of AI. Curricula such as Experience AI, from the Raspberry Pi Foundation, and AI + Ethics, from MIT Media Lab, are grounded in approaches that center ethics. Google’s Teachable Machine and MIT RAISE Playground can be integrated into middle school, where students can engage in interactive experiences of training classifiers that will prepare them to be critical consumers who can evaluate and apply AI in thoughtful ways.

Perhaps most important, human values and agency should drive the application of GenAI—not the other way around. People should determine the course of AI integration. State boards should work to ensure that all stakeholders are collectively building the learning environments that students need to learn and thrive. Understanding and applying AI transparency in a comprehensive way is a critical component of teachers’ AI literacy. When considering transparency through the lens of safety, educators can ensure that precautions have been taken to mitigate the harmful effects of AI. Explainability means that educators understand how the AI system works. Implementing AI in a fair way in classrooms means that teachers understand the biases that exist in AI tools and account for these when integrating them, particularly with their students with disabilities and those whose primary language is not English. When considering accountability, teachers and their school and district leaders must be aware of who is accountable for the mistakes of an AI system. When teachers apply interpretability in the classroom, they ensure that the explanations AI provides are clear to their students. By grounding AI integration in principled innovation with a commitment to human flourishing, both educators and students can become agentic users of AI as they engage with these tools thoughtfully, critically, and responsibly to support inclusive learning environments.

Janice Mak is a researcher and faculty member at Arizona State University and former Arizona State Board of Education member. She serves on the Association for Computing Machinery’s Education Advisory Committee and co-chairs its Ethical and Societal Impacts of GenAI in Education task force. Carolina Torrejon Capurro is a teaching assistant professor at Oklahoma State University’s School of Educational Foundations, Leadership, and Aviation. The work was supported by Google and the Spencer Foundation.

Notes

[1] Fengchun Miao et al., “AI and Education: Guidance for Policymakers” (Paris: UNESCO, 2021).

[2] Julia Kaufman et al., “Uneven Adoption of Artificial Intelligence Tools among US Teachers and Principals in the 2023–2024 School Year,” research report (Rand, 2025).

[3] Jenny McCann, “How States Are Responding to the Rise of AI in Education,” blog (Education Commission of the States, June 17, 2025).

[4] Illinois Public Act 103-0451.

[5] Illinois, Report of the Generative AI and Natural Language Processing Task Force (December 2024).

[6] Wisconsin, Governor’s Task Force on Workforce and Artificial Intelligence, “Advisory Action Plan” (July 2024).

[7] Elizabeth Laird, Maddy Dwyer, and Kristin Woelfel, “In Deep Trouble: Surfacing Tech-Powered Sexual Harassment in K-12 Schools,” report (Center for Democracy and Technology, September 2024).

[8] Sandra Anna Laczi and Valéria Posér, “Navigating Children’s Cybersecurity Landscape: Understanding the Impact of Cyberbullying, Online Harassment, and Identity Theft on Children,” paper for international symposium (IEEE, 2024).

[9] See “Principled Innovation,” website.

[10] Catherine Adams et al., “Ethical Principles for Artificial Intelligence in K-12 Education,” Computers and Education: Artificial Intelligence 4 (2023), table 1, https://doi.org/10.1016/j.caeai.2023.100131. The authors draw on frameworks produced by the World Economic Forum (2019), the Institute for Ethical AI in Education (2021), UNESCO (2021), and UNICEF (2021).

[11] Washington Office of Superintendent of Public Instruction, “Human-Centered AI Guidance for K-12 Public Schools,” version 3.0 (July 1, 2024).

[12] Generative AI for Education Hub, website; Council of the Great City Schools and CoSN, “K-12 Generative AI Readiness Checklist” (October 2023); Massachusetts Department of Elementary and Secondary Education, “Artificial Intelligence in K-12 Schools,” web page; Fernanda Pérez Perez, “AI & Accessibility in Education,” Blaschke Report (CoSN and CAST, 2024); Amy Chen Kulesa et al., “Learning Systems: Shaping the Role of Artificial Intelligence in Education,” web page (Bellwether, June 25, 2025); Common Sense Media, “AI Risk Assessments,” web page.

[13] Nataliya Kosmyna, “Your Brain on ChatGPT,” overview (MIT Media Lab, n.d.); Janice Mak, W. Wen, and Marissa Castellana, “Examining Integration of Ethics in Primary AI Curricula,” in Advances in Artificial Intelligence in Education (Springer, forthcoming, 2025); Janice Mak, Marissa Castellana, and W. Wen, “Evaluating AI Teacher Training Curricula through a Lens of Equity, Ethics, and Justice,” in Teaching with Artificial Intelligence: A Guide for Primary and Elementary Educators (Routledge, forthcoming, 2025).

[14] Computer Science Teachers Association and AI4K12, “AI Learning Priorities for All K-12 Students,” project (New York: Computer Science Teachers Association, 2025).
